We’ll use `DocumentArray.from_files()` so we can just auto-load everything from one directory.

After feeding our DocumentArray into the Flow we’ll have the processed DocumentArray stored in `indexed_docs`.

`indexed_docs` contains just one Document (based on rabbit.pdf), containing text chunks and image chunks.

Let’s take a look at the summary of that DocumentArray with `indexed_docs.summary()`. And now let’s check out some of those chunks with `indexed_()`.
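To make the “one Document holding text chunks and image chunks” idea a bit more concrete, here’s a rough sketch that uses plain dictionaries rather than real docarray objects. The `mime_type` key, the sample chunks, and the `count_chunks_by_modality` helper are all made up for illustration, so treat it as a picture of the structure and not as the output of PDFSegmenter.

```python
from collections import Counter

# Hypothetical stand-in for one processed Document: plain dicts, not docarray objects.
indexed_doc = {
    "uri": "data/rabbit.pdf",
    "chunks": [
        {"mime_type": "text/plain", "text": "Rabbits are small mammals."},
        {"mime_type": "image/png", "text": ""},
        {"mime_type": "text/plain", "text": "They live in burrows."},
    ],
}


def count_chunks_by_modality(doc: dict) -> Counter:
    """Count chunks by the top-level part of their MIME type (text, image, ...)."""
    return Counter(chunk["mime_type"].split("/")[0] for chunk in doc["chunks"])


counts = count_chunks_by_modality(indexed_doc)
print(dict(counts))
```

A tally like this is a quick sanity check that a PDF produced both kinds of chunk before you go on to index them.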


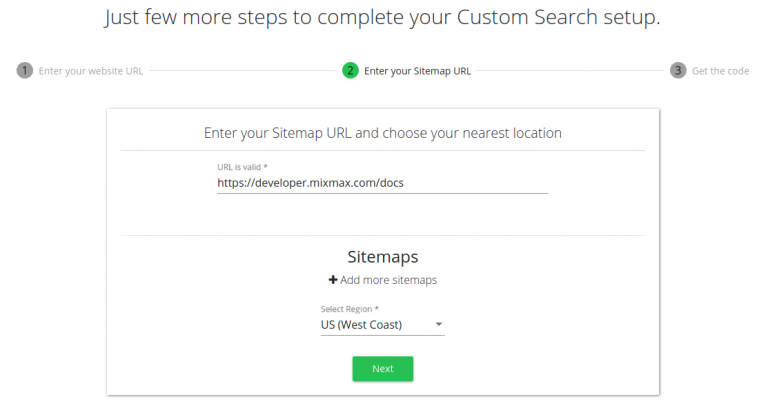

Of course, for your own use case you may be searching PDFs you didn’t create yourself. Be sure to let us know your own personal footgun collection!

## Extracting text and images

Now that we have our PDF, we can run it through a Jina Flow (using a Hub Executor) to extract our data. A Flow refers to a pipeline in Jina that performs a “big” task, like building up a searchable index of our PDF documents, or searching through said index.

Each Flow is made up of several Executors, each of which performs a simpler task. These are chained together, so any Document fed into one end will be processed by each Executor in turn and then the processed version will pop out of the other end.

In our Flow we’ll start with just one Executor, PDFSegmenter, which we’ll pull from Jina Hub. With Jina Sandbox we can even run it in the cloud so we don’t have to use local compute:

```python
from jina import Flow

flow = Flow().add(uses="jinahub+sandbox://PDFSegmenter", install_requirements=True, name="segmenter")
```

We’ll feed in our PDFs in the form of a DocumentArray. In Jina, each piece of data (be it text, image, PDF file, or whatever) is a Document, and a DocumentArray is just a container for these.

```python
from docarray import DocumentArray

docs = DocumentArray.from_files("data/*.pdf", recursive=True)
```
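If the “chained Executors” idea feels abstract, here’s a toy version in plain Python. It doesn’t use Jina at all: `ToyDocument`, `toy_segmenter`, `toy_lowercaser`, and `run_toy_flow` are hypothetical names invented for this sketch, and a real Flow does a lot more (networking, batching, and so on). It only shows the shape of the idea: a document goes in one end, each step processes it in turn, and the processed version comes out the other end.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ToyDocument:
    """Stand-in for a Document: some content plus nested chunks."""
    content: str
    chunks: List["ToyDocument"] = field(default_factory=list)


# In this sketch an "executor" is just a function that takes a document
# and returns the processed document.
ToyExecutor = Callable[[ToyDocument], ToyDocument]


def toy_segmenter(doc: ToyDocument) -> ToyDocument:
    """Split the content into one chunk per non-empty line."""
    doc.chunks = [ToyDocument(line) for line in doc.content.splitlines() if line.strip()]
    return doc


def toy_lowercaser(doc: ToyDocument) -> ToyDocument:
    """Lowercase every chunk produced by an earlier step."""
    for chunk in doc.chunks:
        chunk.content = chunk.content.lower()
    return doc


def run_toy_flow(executors: List[ToyExecutor], docs: List[ToyDocument]) -> List[ToyDocument]:
    """Pass each document through every executor, in order."""
    processed = []
    for doc in docs:
        for executor in executors:
            doc = executor(doc)
        processed.append(doc)
    return processed


docs = [ToyDocument("Rabbits are small mammals.\n\nThey live in BURROWS.")]
indexed_docs = run_toy_flow([toy_segmenter, toy_lowercaser], docs)
print([chunk.content for chunk in indexed_docs[0].chunks])
```

The order of the list matters here: the lowercasing step has nothing to work on unless the segmenter has already created the chunks, which mirrors how each Executor in a Flow sees the output of the one before it.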


Finally, we’ll look at some other useful tasks, like extracting metadata.

This is just a rough and ready roadmap, so stay tuned to see how things really pan out.

- Executor Hub: so we don’t have to build every single little processing unit. Instead we can just pull them from the cloud.

We may throw in a few other tools along the way for certain processing tasks, but the ones above are the big three.

There are a few ways we could get the text and images out of a PDF:

1. Take a PDF file and use Jina Hub’s PDFSegmenter to extract the text and images into chunks.
2. Screenshot every page of the PDF with ImageMagick and OCR it.
3. Convert the PDF to HTML using something like Pandoc, extracting images to a directory and then converting the HTML to text using Pandoc again. Or something like this with similar tools.

I went with the first option since I didn’t want to shave too many yaks.

## Getting our PDF

First, we need a sample file. Let’s just print-to-PDF an entry from Wikipedia, in this case the “Rabbit” article.

## Footguns

A footgun is a thing that will shoot you in the foot. Rest assured you will find many of them when you attempt to work with PDFs. These ones just involve generating a PDF to work with!

- Firefox and Chrome create PDFs that are slightly different. In my experience Firefox tried to be fancy about glyphs, turning scientific into scientiﬁc (with a single ligature glyph standing in for “fi”). I’ve got enough potential PDF headaches to last a lifetime, so to be safe I used the Chrome version.
- Headers, footers, etc. should be disabled, otherwise our index will be full of page 4/798 and similar.
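If you’re stuck with PDFs you can’t regenerate, both of these footguns can be softened after extraction. The sketch below is one possible cleanup, not something PDFSegmenter does for you. It assumes the “fancy glyphs” are Unicode ligatures such as ﬁ (U+FB01), which NFKC normalization folds back into plain letters, and that footers look like `page 4/798` on a line of their own. The `PAGE_FOOTER` pattern and the function names are hypothetical, so adjust them to whatever your PDFs actually contain.

```python
import re
import unicodedata

# Hypothetical pattern for footer lines like "page 4/798"; tune it to your own PDFs.
PAGE_FOOTER = re.compile(r"^\s*page\s+\d+\s*/\s*\d+\s*$", re.IGNORECASE)


def normalize_glyphs(text: str) -> str:
    """Fold compatibility characters (e.g. the 'fi' ligature U+FB01) into plain letters."""
    return unicodedata.normalize("NFKC", text)


def drop_page_footers(text: str) -> str:
    """Remove lines that consist only of a page counter."""
    kept = [line for line in text.splitlines() if not PAGE_FOOTER.match(line)]
    return "\n".join(kept)


def clean_extracted_text(text: str) -> str:
    """Apply both cleanups: glyph normalization first, then footer removal."""
    return drop_page_footers(normalize_glyphs(text))


sample = "Rabbits are a scienti\ufb01c curiosity.\npage 4/798\nThey live in burrows."
cleaned = clean_extracted_text(sample)
print(cleaned)
```

One caveat: NFKC changes more than ligatures (it also rewrites things like superscripts and full-width characters), so if your documents rely on those you may prefer a narrower replacement table.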


Alternative title: the joys and headaches of trying to process Turing-complete file formats

With neural search seeing rapid adoption, more people are looking at using it for indexing and searching through their unstructured data. I know several folks already building PDF search engines powered by AI, so I figured I’d give it a stab too.

This will be part 1 of 3 posts that walk you through creating a PDF neural search engine using Python:

- In this post we’ll cover how to extract the images and text from PDFs, process them, and store them in a sane way.
- For the next post we’ll look at feeding these into CLIP, a deep learning model that “understands” text and images. After extracting our PDF’s text and images, CLIP will generate a semantically-useful index that we can search by giving it an image or text as input (and it’ll understand the input semantically, not just match keywords or pixels).
- Next we’ll look at how to search through that index using a client and Streamlit frontend.
